After our poster at EvoStar, GECCO 2019 saw another poster on the evolution of Angry Birds structures, on this occasion focused on including the Box2D physics simulation engine in the evolutionary algorithm to avoid using Science Birds, which sped up the evaluation of the structures that needed it by 100x.

The poster is minimalistic, with the intention of making it awesome.

Get data, code and the paper itself from our repository.

Author archive: jjmerelo

From computer engineering and computer science to artificial intelligence

Early June saw the Interdisciplinary Summer School on Artificial Intelligence take place, with talks on diverse subjects that ranged from creativity in Twitter bots by Tony Veale to my own talk on computer science and engineering and how they can help AI.

The talk had two parts; the first focused on how AI is improving in performance and decreasing its energy footprint via design of specific chips, many of them based on the RISC-V open hardware architecture. The second part was mainly devoted to concurrent programming and how algorithms and whole applications must be changed to meet the challenges of creating cloud-native programs.

Our research group fully supports free software and open science, and the field of AI is ratcheting up its achievements by working on free stacks, from free hardware to the whole set of programs and services that support them. Understanding these stacks will help us design better algorithms and frameworks in the future.

Exploring concurrent evolutionary algorithms in Perl 6

Last April in Leipzig we presented our paper on concurrent evolutionary algorithms using Perl 6, the new multiparadigm language that includes Channel-based parallelism.

- If your university library allows it, download the paper, which is also available from GitHub in Knitr format, along with experimental data and scripts for processing it.

- The paper was presented as a poster. Check it out.

- A brief presentation was also made.

- All the code is available from the examples directory of this repo, obviously under a free license.

Perl 6 proved to be a great testbed for concurrent evolutionary algorithms, using bioinspiration to design the algorithm. There is still a lot to be done in that area, though.
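
As a rough illustration of what channel-based concurrency looks like in an evolutionary algorithm, here is a minimal sketch in Python rather than Perl 6, with `queue.Queue` standing in for a Channel. It is not the algorithm from the paper: the OneMax fitness, the chunking scheme and every name and parameter below are assumptions made for the example.

```python
import queue
import random
import threading


def onemax(bits):
    """Toy fitness (an assumption for this sketch): number of ones."""
    return sum(bits)


def evolve_chunk(chunk, rng):
    """Mutate every individual and keep the better of parent and child."""
    evolved = []
    for ind in chunk:
        rate = 1 / len(ind)
        child = [bit ^ (rng.random() < rate) for bit in ind]
        evolved.append(max(ind, child, key=onemax))
    return evolved


def worker(channel, seed, rounds):
    """Take a chunk from the channel, evolve it, and send it back."""
    rng = random.Random(seed)
    for _ in range(rounds):
        chunk = channel.get()
        channel.put(evolve_chunk(chunk, rng))


def run(n_workers=4, chunk_size=8, length=32, rounds=200, seed=1):
    rng = random.Random(seed)
    channel = queue.Queue()
    # One chunk per worker, so a blocked worker is always waiting for a
    # chunk that some other worker is about to send back.
    for _ in range(n_workers):
        channel.put([[rng.randint(0, 1) for _ in range(length)]
                     for _ in range(chunk_size)])
    threads = [threading.Thread(target=worker,
                                args=(channel, seed + i + 1, rounds))
               for i in range(n_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    population = []
    while not channel.empty():
        population.extend(channel.get())
    return population
```

Calling `run()` returns the final population once all workers have finished; the only communication between workers goes through the channel, and no worker holds a reference to the whole population.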

Stateless evolutionary algorithms

Most algorithms keep some kind of state: a global variable that holds the optimum, a counter of the number of evaluations, some context every piece of the algorithm must be aware of. However, this might not be the best approach when we want to create cloud-native algorithms, and it is not in the case of cloudy evolutionary algorithms. There was a bit of that in GECCO, but since I was attending the Perl Conference in Glasgow, and I was using Perl, I kind of switched focus from the evolutionary part (although there was a bit of that too) to the language-design part and talked about evolutionary algorithms in Perl 6. The presentation is linked from the talk description.

The main problem is that you have to create dataflows that allow the algorithm to progress and also work efficiently in that kind of concurrent architecture, which is similar to the serverless architecture that is our eventual target.
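
To make the idea of statelessness a bit more concrete, here is a minimal Python sketch (not the Perl 6 code from the talk). Everything the algorithm needs, including the evaluation counter and the random seed, travels inside an immutable message, and a generation is a pure function from message to message. The `Message` class, the OneMax fitness and the selection scheme are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Hypothetical message: all the 'state' travels with the data."""
    population: tuple   # tuple of individuals, each a tuple of bits
    evaluations: int    # evaluation counter carried along, not global
    seed: int           # randomness is also part of the message


def fitness(ind):
    """Toy fitness (an assumption for this sketch): number of ones."""
    return sum(ind)


def step(msg):
    """One generation as a pure function: same message in, same message out."""
    rng = random.Random(msg.seed)
    pop = msg.population
    children = [max(pop, key=fitness)]          # elitism: keep the best
    while len(children) < len(pop):
        a, b = rng.sample(pop, 2)               # binary tournament
        parent = max(a, b, key=fitness)
        rate = 1 / len(parent)
        children.append(tuple(bit ^ (rng.random() < rate) for bit in parent))
    # Count one evaluation per individual of the new population.
    return Message(tuple(children),
                   msg.evaluations + len(children),
                   rng.getrandbits(32))
```

Since `step` reads and writes nothing outside its argument, it could in principle run anywhere (a thread, another process, a serverless function) and generations can be chained as `step(step(msg))`.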

We’ll be continuing this research in the workshop on engineering applications in Medellín, where my keynote will deal with this same topic.

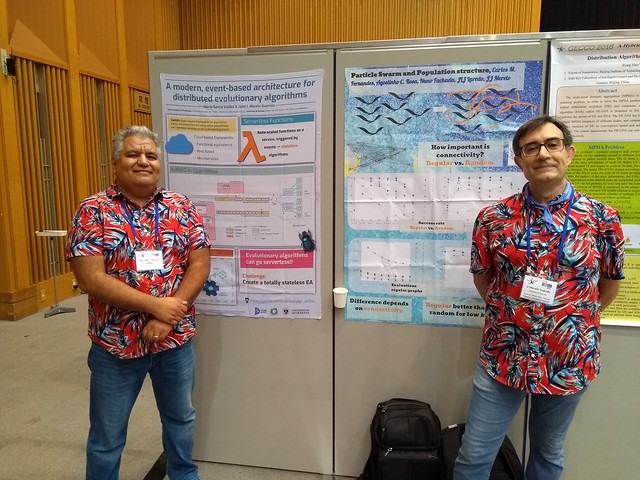

GECCO posters: modern evolutionary algorithms and particle swarm optimization methodologies

Besides the two papers we presented in GECCO workshops, our research group also had a couple of posters in the main track. Posters get a two-page publication that you can find if you want, but probably the posters themselves will be much more informative.

The first one, with Mario García, presented a new (almost) serverless architecture for evolutionary algorithms.

The second one, with Juanlu García and Carlos Fernandes, presents a structured population approach to avoid premature convergence problems in Particle Swarm Optimization algorithms.

This last work shows that a regular population structure works better when the degree of connectivity is low, and that this degree is quite important and has a big influence on the results.
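
For readers who have not met structured populations in PSO, the following Python sketch shows one common regular structure, a ring in which every particle only sees its `k` nearest neighbors on each side, so that `k` controls the degree of connectivity. This is a textbook local-best PSO written for illustration, not the method from the poster; the sphere function and the values of `w`, `c1` and `c2` are assumptions.

```python
import random


def sphere(x):
    """Toy objective to minimize (an assumption for this sketch)."""
    return sum(v * v for v in x)


def ring_neighbors(i, n, k):
    """Particle i plus its k nearest neighbors on each side of a ring of n."""
    return [(i + d) % n for d in range(-k, k + 1)]


def pso(f, dim=5, n=20, k=1, iters=200, seed=0, w=0.72, c1=1.49, c2=1.49):
    """Local-best PSO on a ring; returns the best value found."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n)]
    vel = [[0.0] * dim for _ in range(n)]
    best_pos = [p[:] for p in pos]
    best_val = [f(p) for p in pos]
    for _ in range(iters):
        for i in range(n):
            # The social attractor is the best personal best in the
            # particle's ring neighborhood, not in the whole swarm.
            j = min(ring_neighbors(i, n, k), key=lambda idx: best_val[idx])
            for d in range(dim):
                vel[i][d] = (w * vel[i][d]
                             + c1 * rng.random() * (best_pos[i][d] - pos[i][d])
                             + c2 * rng.random() * (best_pos[j][d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < best_val[i]:
                best_val[i] = val
                best_pos[i] = pos[i][:]
    return min(best_val)
```

Running `pso(sphere, k=1)` and `pso(sphere, k=5)` with the same seed is a quick way to see how the degree of connectivity changes the outcome on a given problem.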

As usual, customers received beautiful origami after listening to the explanation. Visit us next time!

Self-organized criticality in code repositories

The GeNeura team is spread all over the world, and Dr. Juanlu Jiménez is in Le Havre as associate professor. He’s been kind enough to invite us for a visit, and here’s the presentation we gave there.

Equipe Réseaux d’interactions et Intelligence Collective

During the last two weeks, we have been enjoying the visit of JJ Merelo to the Ri2C team. On May 19th, he delivered a seminar entitled Self-organized criticality in code repositories; you can find the abstract and the presentation below.

Abstract

It’s been known for some time that work in code repositories tends to self-organize, possibly reaching a self-organized critical state. What was not known were the conditions for this to happen, and what kind of description of the repository is needed to find these properties. In this talk we describe how a self-organized critical state has been found in a wide variety of repositories, whether they contain code or not.

The slides of the presentation are available at: https://jj.github.io/soc-code-repos/#/

A better TORCS driving controller presented in EvoStar 2018

Last year we presented, along with Mohammed Salem from the University of Mascara in Algeria, our TORCS driving controller. This controller effectively drives a simulated vehicle, taking input from its sensors and deciding on a target speed and how to turn the steering wheel.

This year, at EvoStar 2018 in Parma, we again had our paper accepted for the poster session, which took place in an incredible corridor. The poster included interactive elements, such as a small car used to demonstrate how the driver worked.

And it works really well, or at least better than the previous versions. The key element was the design of a new fitness function that includes damage as well as terms related to speed. There is still some way to go; in the near future we will be posting our new results in this area.

The book of proceedings can be downloaded from Springer. Our paper is on page 342 and you can also download just the paper from here, but we do open science, so you can follow our writing process and download the paper from this GitHub repository too.

Detection and prediction of flows of people and vehicles

As part of the CIMAS 21 conference, which will be held in Granada, I will give a presentation on the possibilities of our WiFi and Bluetooth frame detection system, which we have already talked about several times.

The presentation will focus on the more analytical aspects of the platform, looking at the possibilities it may offer for a tourist destination with an emphasis on sports.

Early prediction of the outcome of Starcraft Games

As a result of Antonio Álvarez Caballero’s master’s thesis, we’ll be presenting tomorrow at the IJCCI 2017 conference a poster on the early prediction of Starcraft games.

The basic idea behind this line of research is to try and find a model of the game so that we can do fast fitness evaluation of strategies without playing the whole game, which can take up to 60 minutes. That way, we can optimize those strategies in an evolutionary algorithm and find the best ones.

In our usual open science style, paper and data are available in a repository.

Our conclusions say that we might be able to pull that off using the k-nearest neighbor algorithm. But we might have to investigate a bit further if we really want to find a model that gives us some insight into what makes a strategy a winner.
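
For reference, the core of a k-nearest neighbor classifier fits in a few lines. The Python sketch below is a generic version, not the code or the features used in the paper: it assumes each game is summarized early on by a numeric feature vector labeled with the eventual winner, and the function name and data layout are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest neighbors.

    `train` is a list of (feature_vector, label) pairs; in this setting the
    label would be the eventual winner of a game and the features some
    early-game measurements (hypothetical here).
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

A prediction like this takes a fraction of a second, which is what would make it usable as a fast stand-in for playing the whole game when evaluating strategies.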

Dark clouds allow early prediction of heavy rain in Funchal, near where IJCCI is taking place

Self-organized criticality in software repositories, poster presented at ECAL 2017

The European Conference on Artificial Life or ECAL is not one of our usual suspects. Although we have attended from time to time, and even organized it back in 95 (yep, that is a real web page from 1995, minus the slate gray background), it is a conference I quite enjoy, together with other artificial life related conferences. Artificial life was quite the buzzword in the 90s, but nowadays with all the deep learning and AI stuff it has gone out of fashion. Last time I attended, ten years ago, it seemed more crowded. Be that as it may, I have presented a tutorial and a poster about our work on looking for a critical state in software repositories. This is the poster itself, and there is a link to the open access proceedings, although, as you know, all our papers are online and you can obtain that one (and a slew of other ones) from our repository.

This is a line of research we have been working on for a year now, starting with this initial paper where we examined a single repository for the Moose Perl module. We are looking for patterns that allow us to say whether repositories are in a critical state or not. Being as they are completely artificial systems, engineering artefacts, looking for self-organized criticality might seem like a lost cause. On the other hand, it really clicks with our own experience when writing a paper or anything, really. You write in long stretches, and then you do small sessions where you change a line or two.

This paper, which looks at all kinds of open source projects, from Docker to vue.js, checks three different things: long-distance correlations, scale-free behavior of changes, and pink noise in the spectral density of the time series of changes. And we do find them, almost everywhere. Most big repos, with more than a few hundred commits, show them, independently of their language or origin (hobbyist or company).
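
One of these three checks, pink noise, can be sketched in a few lines of Python. The idea is to compute the power spectrum of a time series (for instance, the size of the change in each commit) and fit a straight line to it in log-log scale: a slope near 0 points to white noise, and a slope near -1 to pink, or 1/f, noise. This is a minimal sketch with a plain discrete Fourier transform, not the analysis pipeline used in the paper, and the function names are made up for the example.

```python
import cmath
import math
import random


def periodogram(series):
    """Power at each positive frequency, via a plain O(n^2) DFT."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    freqs, power = [], []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        freqs.append(k / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def spectral_slope(series):
    """Least-squares slope of log(power) against log(frequency)."""
    freqs, power = periodogram(series)
    # Skip zero-power bins, whose logarithm is undefined.
    points = [(math.log(f), math.log(p))
              for f, p in zip(freqs, power) if p > 0]
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx
```

The quadratic-time transform is fine for a few hundred commits; for long histories an FFT would be the usual replacement.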

There is still a lot of work ahead. What are the main mechanisms for this self-organization? Are there any exceptions? That will have to wait until the next conference.