Our journal paper on the Sandpile Mutation operator for Genetic Algorithms is now available online: C.M. Fernandes, J.L.J. Laredo, A.C. Rosa, J.J. Merelo, “The sandpile mutation Genetic Algorithm: an investigation on the working mechanisms of a diversity-oriented and self-organized mutation operator for non-stationary functions”, Applied Intelligence, February 2013.

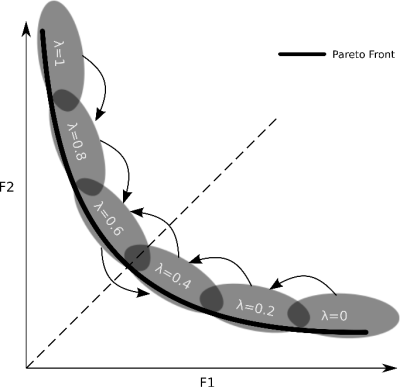

Abstract: This paper reports an investigation of the sandpile mutation, an unconventional mutation control scheme for binary Genetic Algorithms (GA) inspired by the Self-Organized Criticality (SOC) theory. The operator, which is based on a SOC system known as the sandpile, generates mutation rates that, unlike those given by other methods of parameter control, oscillate between low values and very intense mutation events. The distribution of the mutation rates suggests that the algorithm can be an efficient, yet simple and context-independent, approach to the optimization of non-stationary fitness functions. This paper studies the mutation scheme of the algorithm and proposes a new strategy that optimizes its performance. The results also demonstrate the advantages of using the fitness distribution of the population to control the mutation. An extensive experimental setup compares the sandpile mutation GA (GGASM) with two state-of-the-art evolutionary approaches to non-stationary optimization and with the Hypermutation GA, a classical approach to dynamic problems. The results demonstrate that GGASM outperforms the other algorithms in several dynamic environments. Furthermore, the proposed method does not increase the parameter set of traditional GAs. A study of the distribution of the mutation rates shows that it depends on the type of problem and dynamics, meaning that the algorithm is able to self-regulate the mutation. The effects of the operator on the diversity of the population during the run are also investigated. Finally, a study of the effects of the topology of the sandpile mutation on its performance shows that an alternative topology has only minor effects on performance.
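To give a feel for why a sandpile produces mutation rates that swing between quiet periods and bursts, here is a minimal sketch of a generic Bak-Tang-Wiesenfeld sandpile coupled to a binary population. It is not the GGASM implementation from the paper. The mapping is an assumption made for illustration: the grid has one row per individual and one column per gene, and every time a cell topples, the corresponding bit is flipped. The abstract says the population's fitness distribution controls the mutation but does not say how, so the number of grains dropped per generation (`grains`) is left as a plain, hypothetical parameter. The function name `sandpile_mutation` is likewise hypothetical.

```python
import random

THRESHOLD = 4  # classic BTW critical height on a 2D lattice with 4 neighbours


def sandpile_mutation(population, grid, grains, rng):
    """Drop `grains` grains on random cells; each toppling flips the matching bit.

    population: list of lists of 0/1 (rows = individuals, columns = genes)
    grid:       list of lists of ints, same shape, every cell below THRESHOLD
    Returns the number of bit flips (equal to the number of topplings).
    """
    rows, cols = len(grid), len(grid[0])
    flips = 0
    for _ in range(grains):
        r, c = rng.randrange(rows), rng.randrange(cols)
        grid[r][c] += 1
        stack = [(r, c)]  # cells that may have reached the threshold
        while stack:
            i, j = stack.pop()
            if grid[i][j] < THRESHOLD:
                continue
            grid[i][j] -= THRESHOLD       # topple: shed one grain per neighbour
            population[i][j] ^= 1         # assumption: a toppling mutates this gene
            flips += 1
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < rows and 0 <= nj < cols:  # grains leaving the grid are lost
                    grid[ni][nj] += 1
                    stack.append((ni, nj))
            stack.append((i, j))  # the cell may still be at or above the threshold
    return flips


# Usage: track the per-generation mutation rate on a small random population.
rng = random.Random(1)
POP_SIZE, GENES = 10, 20
pop = [[rng.randint(0, 1) for _ in range(GENES)] for _ in range(POP_SIZE)]
grid = [[0] * GENES for _ in range(POP_SIZE)]
rates = []
for generation in range(50):
    flips = sandpile_mutation(pop, grid, grains=20, rng=rng)
    rates.append(flips / (POP_SIZE * GENES))
print(min(rates), max(rates))
```

Because grains leaving the grid through its open edges are lost, every avalanche is guaranteed to stop. Once the pile has built up, most grains cause few or no topplings while an occasional grain triggers a large avalanche, so the per-generation rate in `rates` alternates between low values and sudden bursts, which is the qualitative behaviour the abstract describes.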